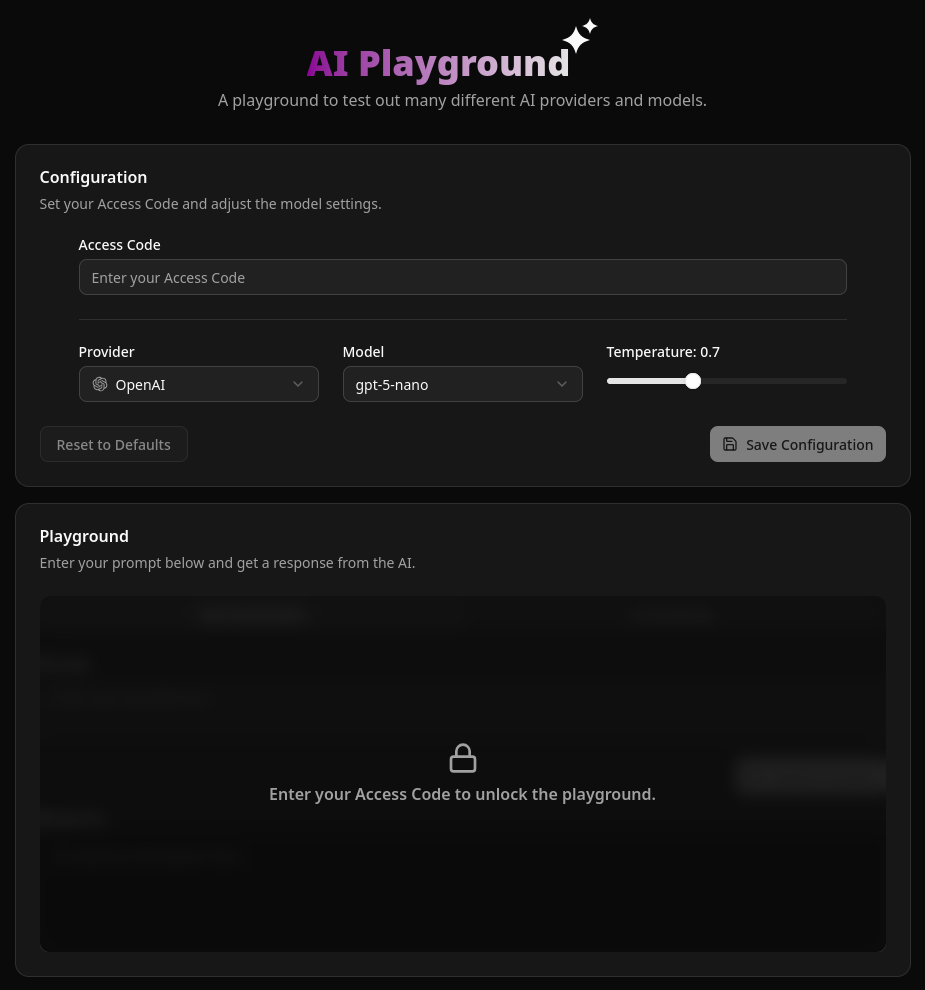

This project is a “clean-room” implementation of the core architecture from a proprietary, NDA-protected commercial project I led. I built the AI Model Playground from first principles to demonstrate my end-to-end ability to design, build, and deploy a modern, cloud-native application.

It is an interactive web application that allows users to select, configure, and test text generation and embedding models from multiple providers, such as Gemini and Anthropic.

The Decoupled Architecture

- Backend: A containerized FastAPI (Python) application deployed on Google Cloud Run. It exposes a REST API and encapsulates all AI provider logic behind a common abstraction.

- Frontend: A modern Next.js (React) application built with shadcn/ui and deployed on Vercel. It consumes the backend API and manages all client-side state with React Context.

- Database: A serverless PostgreSQL database persists all application settings, such as the current model and temperature.
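To make the persistence layer concrete, here is a minimal sketch of a single-row settings table with an upsert. It assumes the settings are stored as one row keyed by a fixed `id`, and it uses Python's built-in `sqlite3` as an offline stand-in for the serverless PostgreSQL database; the table name `app_settings` and the `Settings` fields are hypothetical. The `INSERT ... ON CONFLICT ... DO UPDATE` statement is valid in both SQLite (3.24+) and PostgreSQL, although a Postgres driver such as psycopg uses `%s` placeholders instead of `?`.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class Settings:
    # Hypothetical fields; the project only states that settings include the model and temperature.
    model: str
    temperature: float


# Hypothetical schema: the CHECK constraint keeps the table to exactly one settings row.
SCHEMA = """
CREATE TABLE IF NOT EXISTS app_settings (
    id          INTEGER PRIMARY KEY CHECK (id = 1),
    model       TEXT NOT NULL,
    temperature REAL NOT NULL
)
"""


def save_settings(conn: sqlite3.Connection, s: Settings) -> None:
    # Insert the row on first save, overwrite it on every later save.
    conn.execute(
        """
        INSERT INTO app_settings (id, model, temperature) VALUES (1, ?, ?)
        ON CONFLICT (id) DO UPDATE SET
            model = excluded.model,
            temperature = excluded.temperature
        """,
        (s.model, s.temperature),
    )
    conn.commit()


def load_settings(conn: sqlite3.Connection) -> Optional[Settings]:
    # Returns None until settings have been saved at least once.
    row = conn.execute("SELECT model, temperature FROM app_settings WHERE id = 1").fetchone()
    return Settings(*row) if row else None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    save_settings(conn, Settings(model="model-a", temperature=0.2))
    save_settings(conn, Settings(model="model-b", temperature=0.9))
    print(load_settings(conn))  # prints: Settings(model='model-b', temperature=0.9)
```

Keeping a single row means `load_settings` never has to choose among stale rows; a multi-user variant would instead key the table by a user ID.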

Key Features & Technical Highlights

- Dynamic Provider Management: The backend uses an abstract `LLMProvider` base class, allowing new AI providers to be added in a “plug-and-play” manner.

- Stateful Configuration: User-defined settings are saved to the Postgres database and managed globally on the frontend with a React Context.

- Live AI Testing: Users can send test prompts directly to the configured model and receive real-time responses from the selected provider.

- Embedding Generation: For supported providers like Gemini, users can generate and view vector embeddings for any given text input.

- Secure Endpoint Access: API routes that interact with paid AI services are protected, requiring a valid Access Code to prevent unauthorized use.
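The provider abstraction and the access-code guard can be sketched in plain Python as follows. This is not the project's actual code: the method names (`generate`, `embed`), the `supports_embeddings` flag, the `register` decorator, and `EchoProvider` (an offline stand-in for SDK-backed providers such as Gemini or Anthropic) are all hypothetical. In the FastAPI backend, a check like `check_access_code` would plausibly live in a route dependency; here it is framework-free so the example runs with the standard library alone.

```python
import hmac
from abc import ABC, abstractmethod
from typing import Callable, Dict, List, Type


class LLMProvider(ABC):
    """Hypothetical common interface that every provider adapter implements."""

    # Capability flag the API could expose so the UI only offers embeddings where supported.
    supports_embeddings: bool = False

    @abstractmethod
    def generate(self, prompt: str, model: str, temperature: float) -> str:
        """Return the model's text response to `prompt`."""

    def embed(self, text: str) -> List[float]:
        # Default for providers without an embedding endpoint.
        raise NotImplementedError(f"{type(self).__name__} does not support embeddings")


# Hypothetical registry: a new provider is one subclass plus one decorator line.
PROVIDERS: Dict[str, Type[LLMProvider]] = {}


def register(name: str) -> Callable[[Type[LLMProvider]], Type[LLMProvider]]:
    def decorator(cls: Type[LLMProvider]) -> Type[LLMProvider]:
        PROVIDERS[name] = cls
        return cls
    return decorator


@register("echo")
class EchoProvider(LLMProvider):
    """Offline stand-in for a real SDK-backed provider (makes no network calls)."""

    supports_embeddings = True

    def generate(self, prompt: str, model: str, temperature: float) -> str:
        return f"[{model} @ {temperature}] {prompt}"

    def embed(self, text: str) -> List[float]:
        # Toy vector built from character codes; NOT a real embedding model.
        return [ord(c) / 255 for c in text[:8]]


def get_provider(name: str) -> LLMProvider:
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name!r}") from None


def check_access_code(supplied: str, expected: str) -> bool:
    # Constant-time comparison so response timing does not reveal matching prefixes.
    return hmac.compare_digest(supplied.encode(), expected.encode())


def handle_generate(access_code: str, expected_code: str, provider: str,
                    prompt: str, model: str, temperature: float) -> str:
    # Mirrors a protected route: reject the request before any paid provider is called.
    if not check_access_code(access_code, expected_code):
        raise PermissionError("invalid access code")
    return get_provider(provider).generate(prompt, model, temperature)


if __name__ == "__main__":
    print(handle_generate("secret", "secret", "echo", "Hello", "demo-model", 0.5))
    # prints: [demo-model @ 0.5] Hello
```

With this shape, adding a provider amounts to writing one subclass and decorating it with `@register(...)`; the route handler that dispatches through `get_provider` does not change.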